AI/ML/GenAI on AWS

Venue: Bitexco Financial Tower

Date: Saturday, November 15, 2025

Event Objectives

- Provide a practical introduction to AI/ML/GenAI capabilities on AWS with a focus on Amazon Bedrock

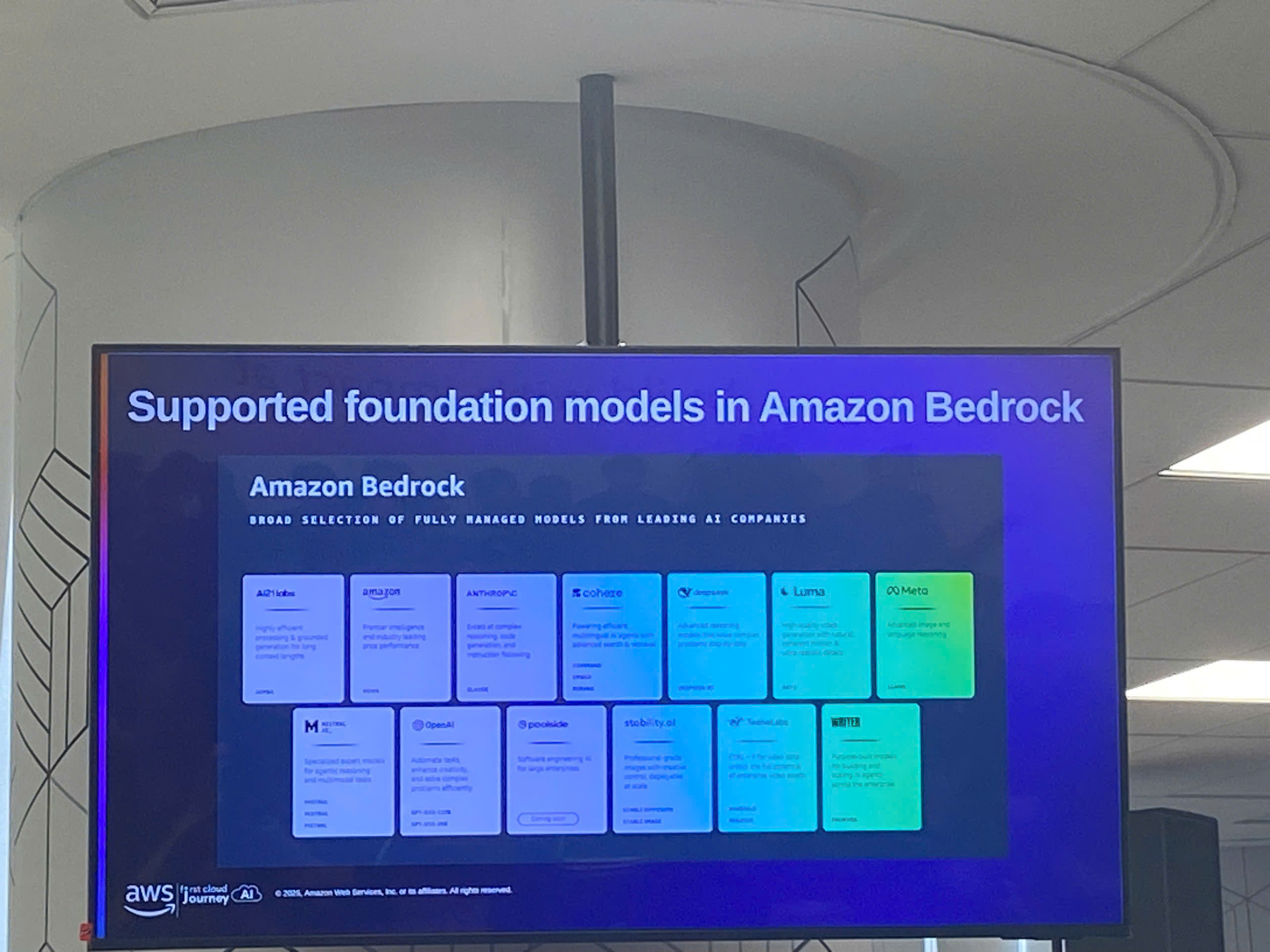

- Help participants understand how to choose Foundation Models (Claude, Llama, Titan) for different use cases

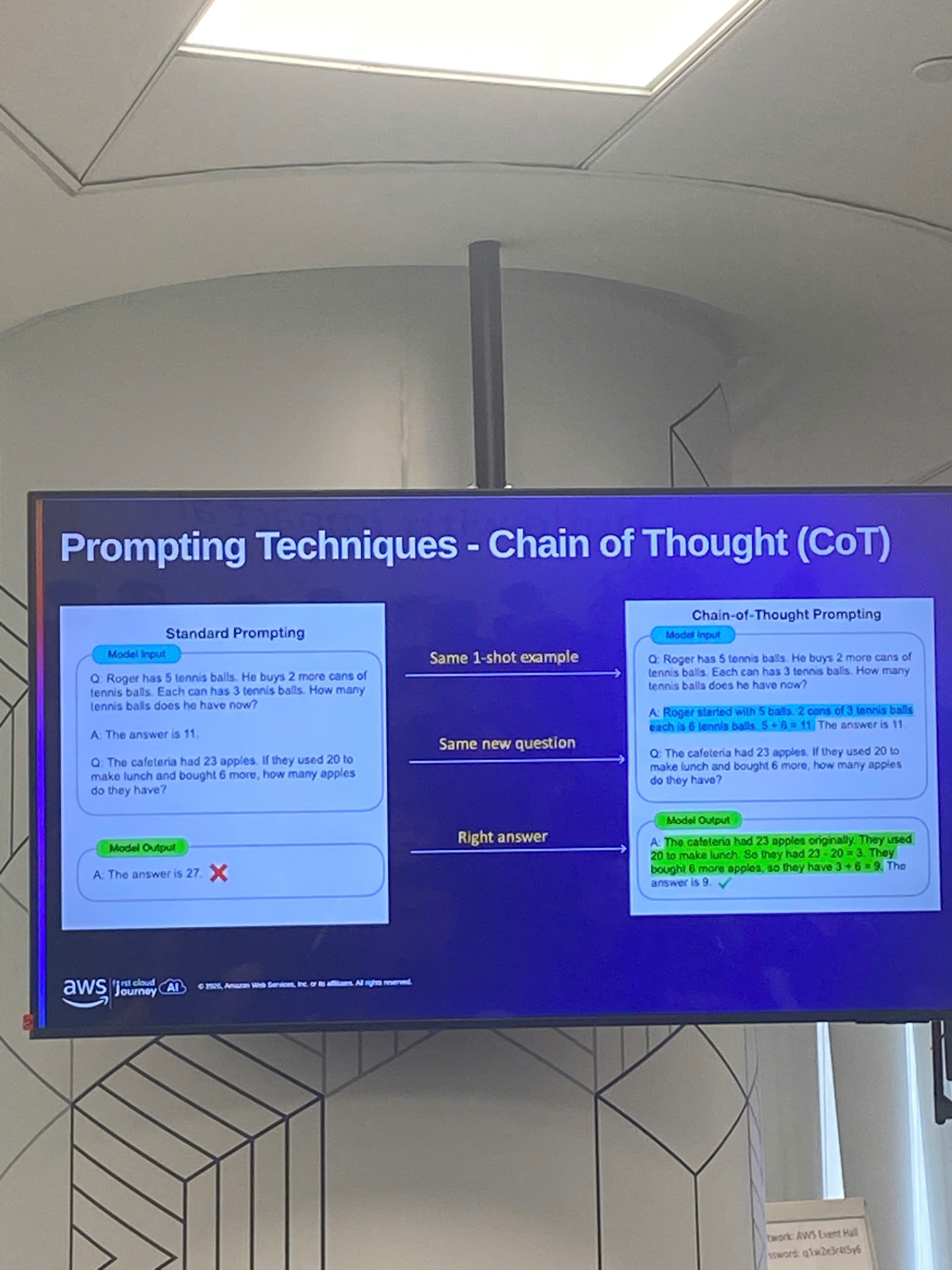

- Teach prompt engineering techniques (few-shot, structured prompting, reasoning patterns) for better outputs

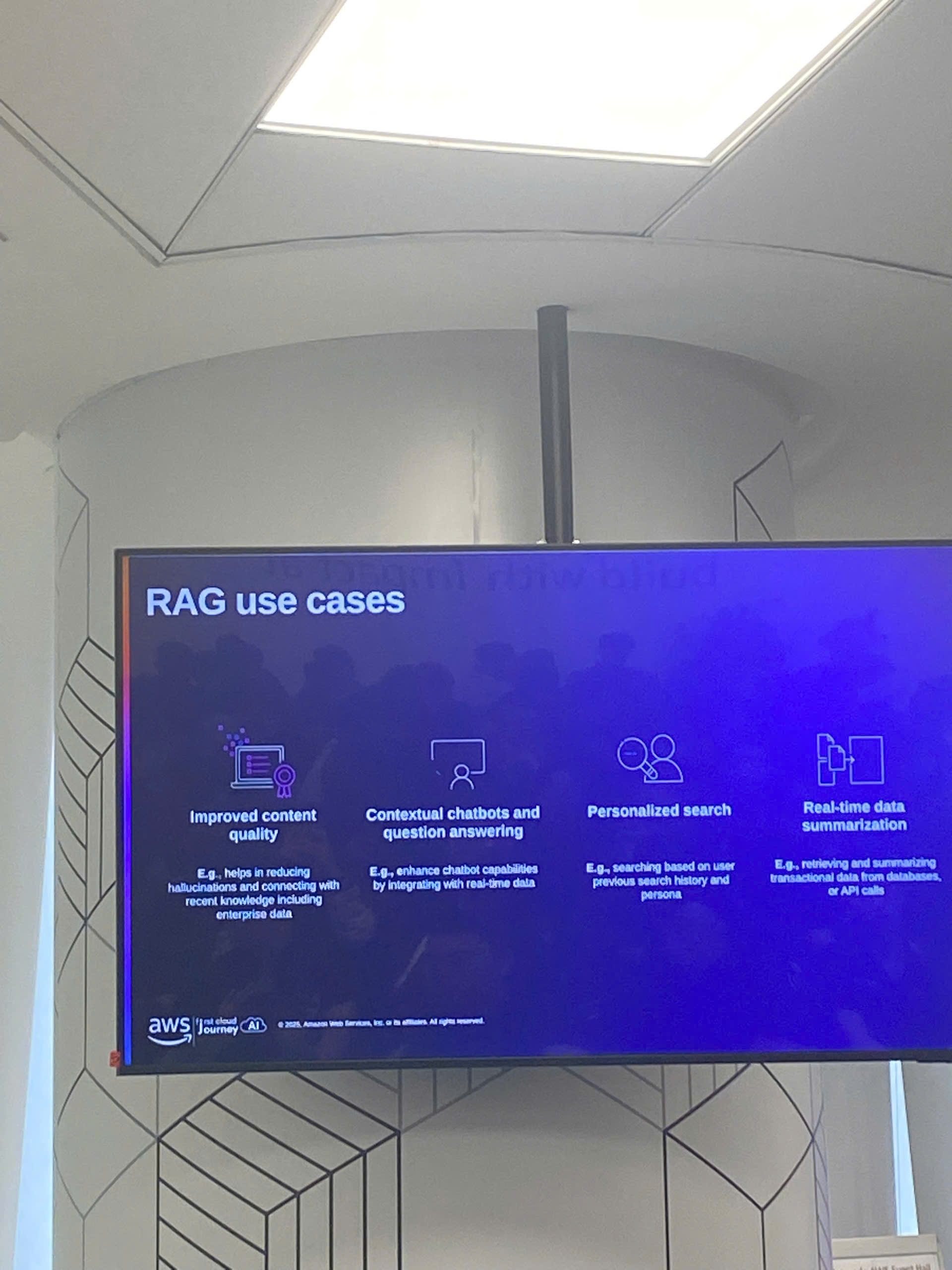

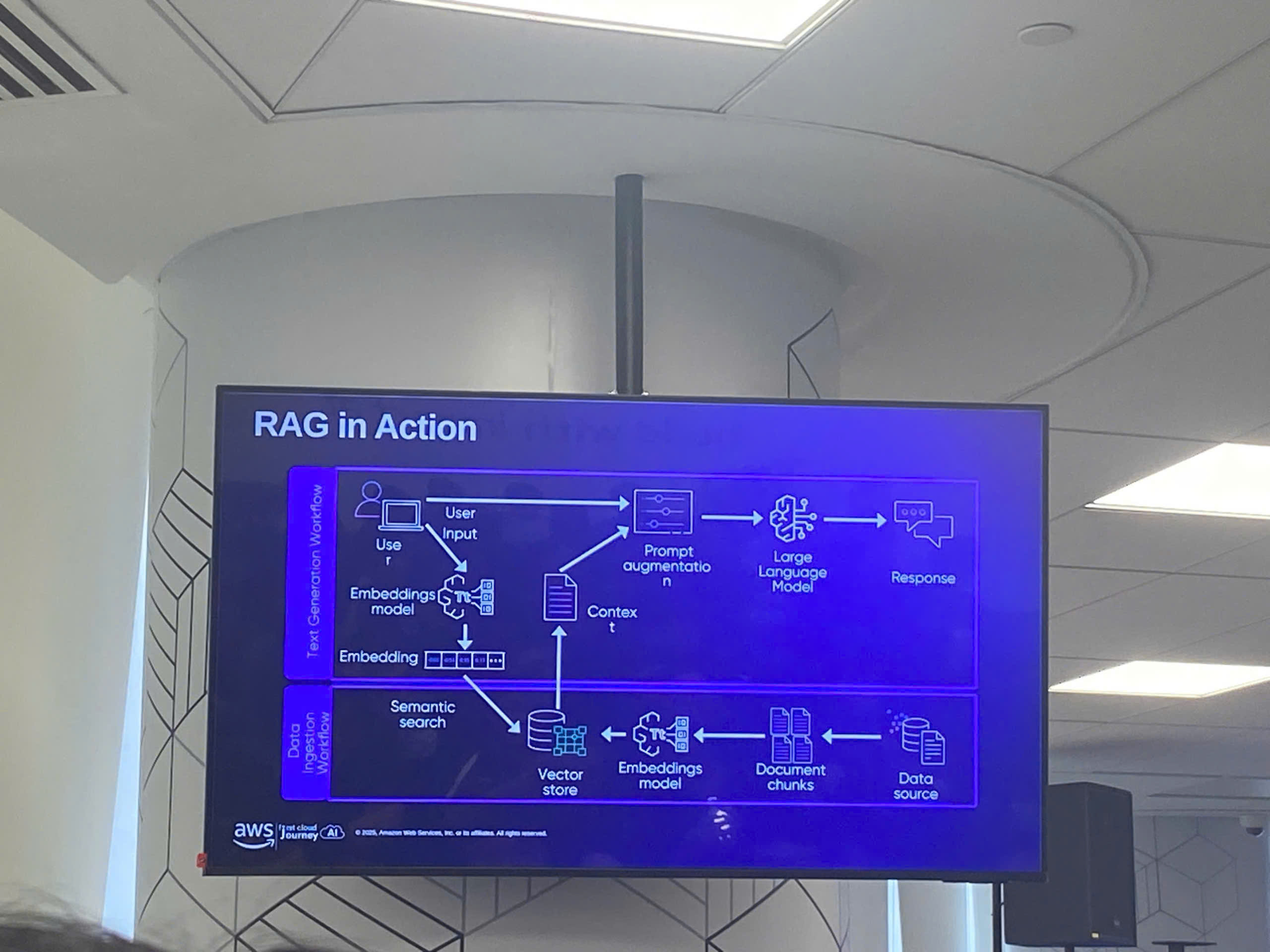

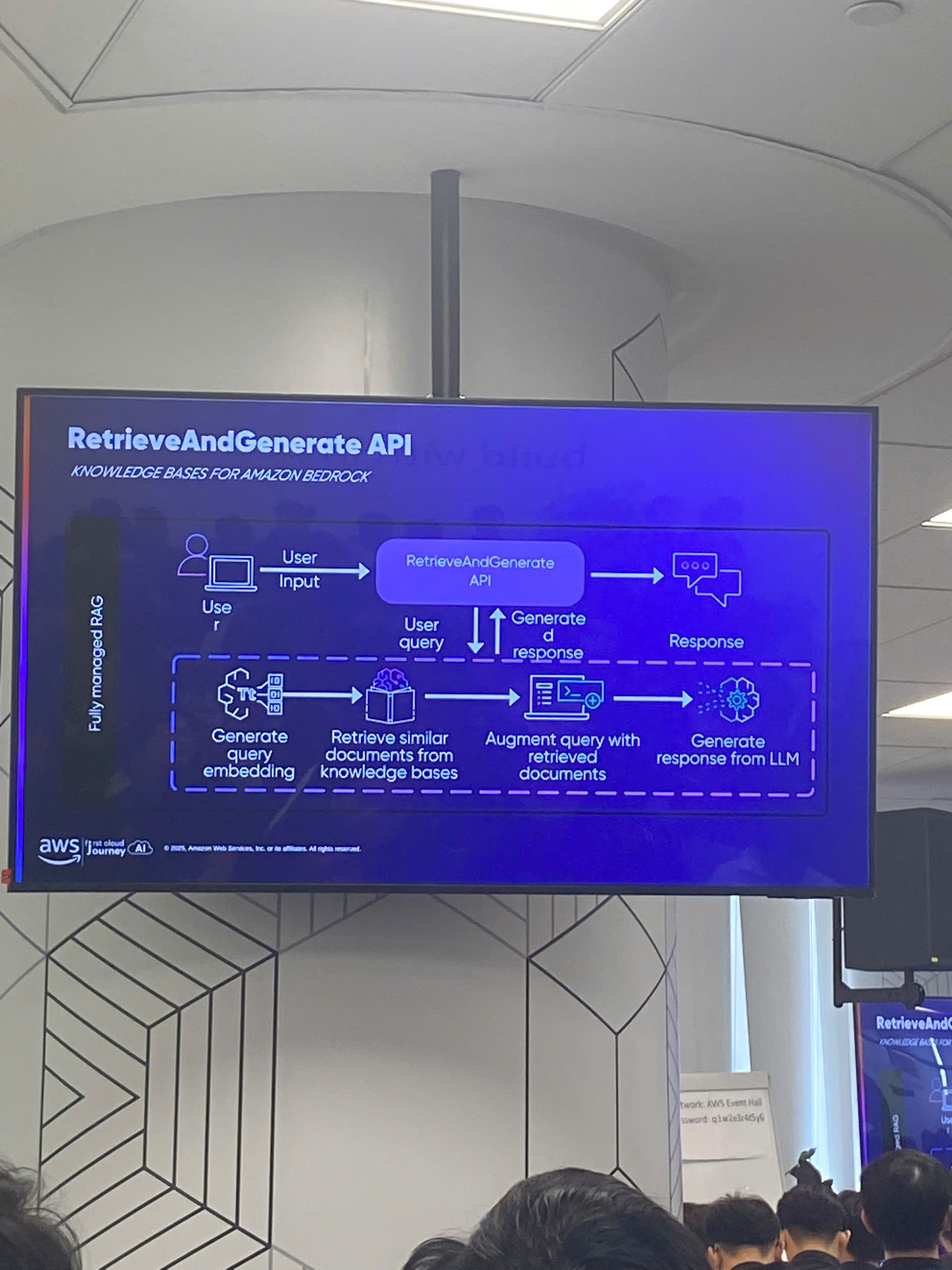

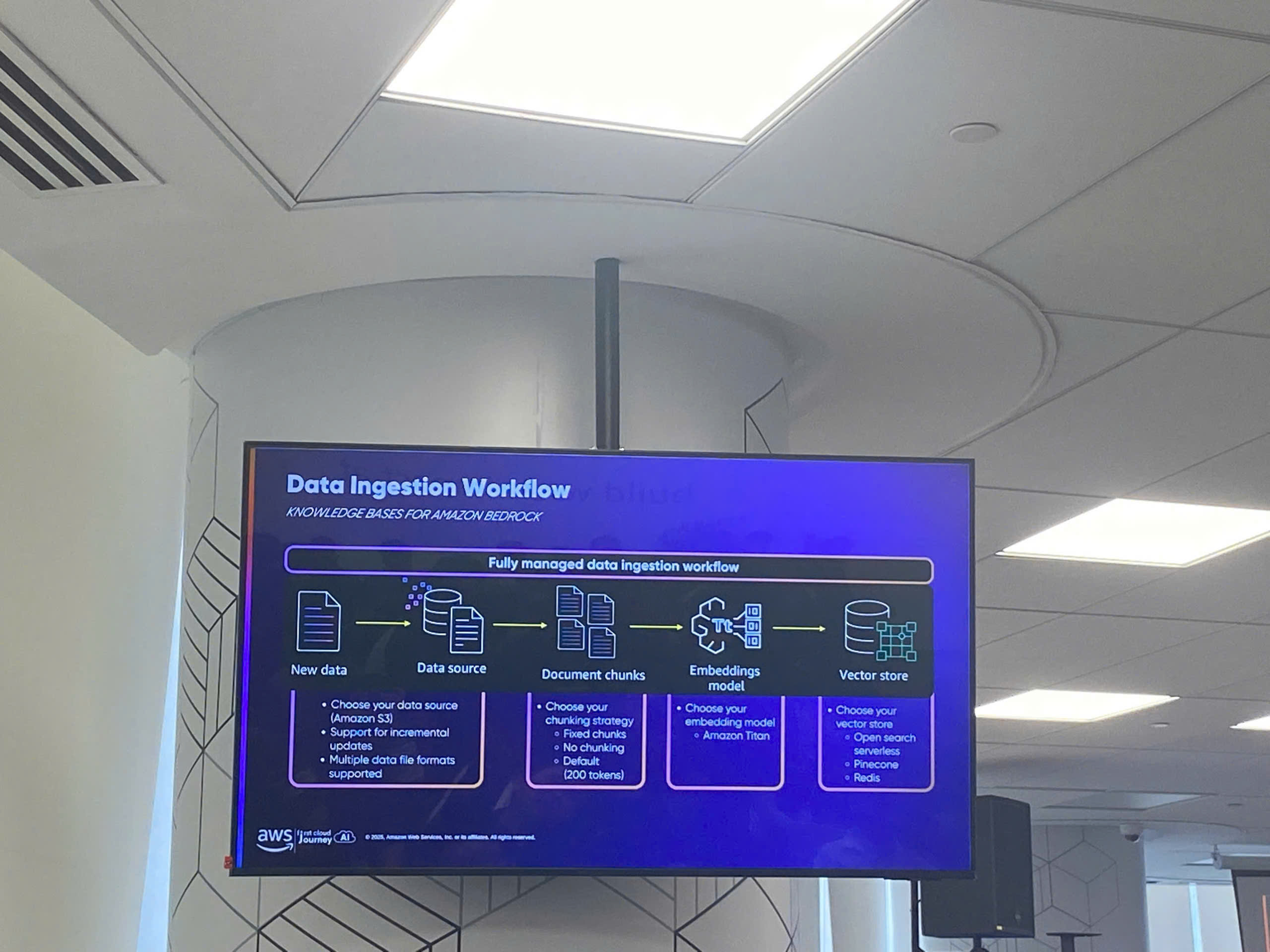

- Explain RAG architecture and how to integrate a Knowledge Base to improve factual accuracy

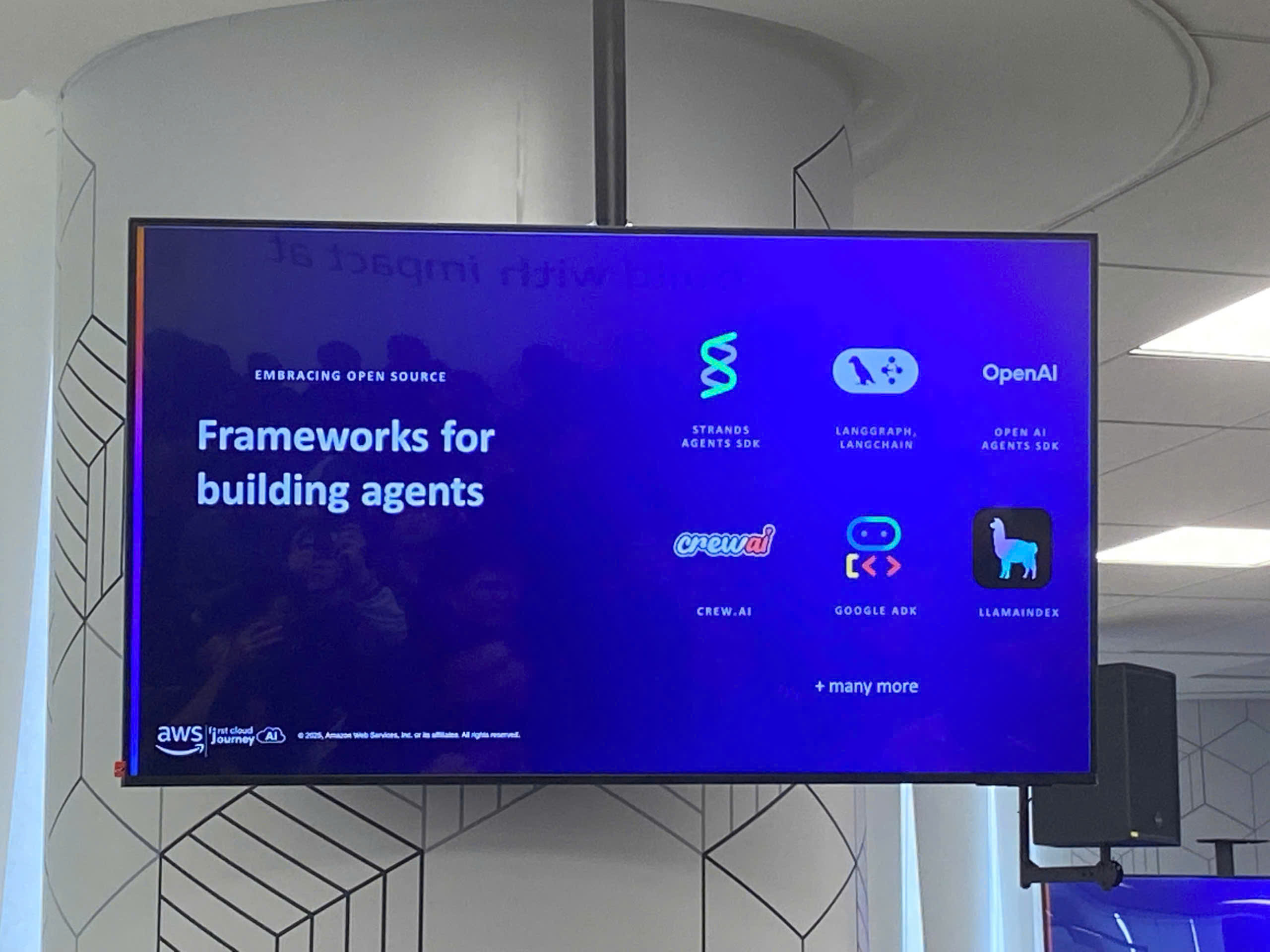

- Introduce Bedrock Agents for multi-step workflows and tool integrations

- Highlight safety practices using Guardrails for content filtering and policy control

- Demonstrate an end-to-end GenAI chatbot built with Amazon Bedrock

Speakers

- Hoàng Kha

- Hữu Nghị

- Hoàng Anh

Key Highlights

Welcome & Introduction

- Registration & networking: Participant check-in and networking with other builders

- Workshop overview: Agenda walkthrough, goals for the half-day workshop, and learning outcomes

- Ice-breaker activity: Warm-up activity to align expectations and encourage interaction

- Speaker introduction: Session led by Hoàng Kha, Hữu Nghị, and Hoàng Anh

Generative AI with Amazon Bedrock

Foundation Models (FM): Comparison of Claude, Llama, and Titan, with guidance on selecting a model for each use case
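One way to make "selection by use case" concrete is to score candidates against the priorities of a given task. The sketch below assumes you have already measured cost, latency, and quality on your own evaluation set. The candidate names, scores, and weights are hypothetical placeholders, not benchmarks of Claude, Llama, or Titan.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    name: str          # hypothetical label for a model you are evaluating
    cost: float        # normalized 0..1, lower is cheaper (your own measurement)
    latency: float     # normalized 0..1, lower is faster
    quality: float     # normalized 0..1, higher is better on your eval set
    tasks: frozenset   # task types the model handled well in your tests


def score(c: Candidate, weights: dict) -> float:
    # Reward quality and penalize cost and latency; the weights encode the use case.
    return (weights["quality"] * c.quality
            - weights["cost"] * c.cost
            - weights["latency"] * c.latency)


def choose_model(candidates, task: str, weights: dict) -> Candidate:
    eligible = [c for c in candidates if task in c.tasks]
    if not eligible:
        raise ValueError(f"no candidate supports task {task!r}")
    return max(eligible, key=lambda c: score(c, weights))


# Hypothetical candidates with placeholder numbers.
CANDIDATES = [
    Candidate("model-large", cost=0.9, latency=0.7, quality=0.95,
              tasks=frozenset({"chat", "reasoning", "summarization"})),
    Candidate("model-small", cost=0.2, latency=0.2, quality=0.70,
              tasks=frozenset({"chat", "summarization"})),
]

# A latency-sensitive chatbot versus a quality-first reasoning task.
chat_pick = choose_model(CANDIDATES, "chat", {"quality": 1.0, "cost": 0.5, "latency": 1.0})
reasoning_pick = choose_model(CANDIDATES, "reasoning", {"quality": 1.0, "cost": 0.2, "latency": 0.2})
```

With these placeholder numbers the chatbot picks the cheaper, faster model, while the reasoning task falls back to the only candidate that supports it.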

Prompt Engineering: Techniques for better responses, including structured prompting and few-shot learning
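Few-shot and structured prompting can be expressed as a system instruction that fixes the output format, followed by example user/assistant turns. The sketch below shapes them like the `system` and `messages` parameters of the Bedrock Runtime Converse API; the classification task and its examples are hypothetical.

```python
def build_few_shot_messages(instruction: str, examples: list, query: str):
    """Return (system, messages) in the Converse API shape."""
    # The structured instruction goes into the system prompt.
    system = [{"text": instruction}]
    messages = []
    for example_input, example_output in examples:
        # Each example is a user turn followed by the ideal assistant reply.
        messages.append({"role": "user", "content": [{"text": example_input}]})
        messages.append({"role": "assistant", "content": [{"text": example_output}]})
    # The real query is the final user turn.
    messages.append({"role": "user", "content": [{"text": query}]})
    return system, messages


INSTRUCTION = ("You classify customer feedback. "
               'Reply with JSON only: {"sentiment": "positive" | "negative" | "neutral"}.')
EXAMPLES = [
    ("The checkout was fast and easy.", '{"sentiment": "positive"}'),
    ("My package arrived damaged.", '{"sentiment": "negative"}'),
]
system, messages = build_few_shot_messages(
    INSTRUCTION, EXAMPLES, "Delivery took a week longer than promised.")
```

With AWS credentials and model access, these could be passed to `boto3.client("bedrock-runtime").converse(modelId=..., system=system, messages=messages)`, where the model ID is whichever model you selected.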

Reasoning patterns: Applying step-based reasoning approaches to improve consistency for complex tasks
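A common step-based pattern asks the model to reason in explicit steps and then finish with a marker line, so the application can reliably extract the answer. The template wording and the `Final answer:` marker below are assumptions for illustration, and the sample reply is hand-written rather than real model output.

```python
import re

REASONING_TEMPLATE = (
    "Solve the task below.\n"
    "1. List the relevant facts.\n"
    "2. Work through the problem step by step.\n"
    "3. End with a single line of the form 'Final answer: <answer>'.\n\n"
    "Task: {task}"
)

# Matches a whole line starting with the marker.
FINAL_ANSWER = re.compile(r"^Final answer:\s*(.+)$", re.MULTILINE)


def build_reasoning_prompt(task: str) -> str:
    return REASONING_TEMPLATE.format(task=task)


def extract_final_answer(model_output: str):
    # Take the last match so an intermediate draft answer does not win.
    matches = FINAL_ANSWER.findall(model_output)
    return matches[-1].strip() if matches else None


prompt = build_reasoning_prompt("How many items are in 6 boxes of 7?")
sample_reply = "Facts: 6 boxes, 7 items each.\nStep 1: 6 * 7 = 42.\nFinal answer: 42"
answer = extract_final_answer(sample_reply)
```

Returning `None` when the marker is missing lets the caller retry or flag the response instead of silently using unstructured text.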

RAG (Retrieval-Augmented Generation): Architecture overview and Knowledge Base integration to ground answers
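The RAG flow is: retrieve relevant passages, inject them into the prompt as context, then generate a cited answer. Bedrock Knowledge Bases manages chunking, embeddings, and vector search for you; the sketch below swaps in a simple bag-of-words cosine retriever so the flow runs offline. The FAQ documents and prompt wording are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list:
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: dict, k: int = 2) -> list:
    # Rank documents by similarity to the query (stand-in for vector search).
    q = Counter(tokenize(query))
    ranked = sorted(docs.items(),
                    key=lambda kv: cosine(q, Counter(tokenize(kv[1]))),
                    reverse=True)
    return ranked[:k]


def build_grounded_prompt(query: str, docs: dict, k: int = 2):
    hits = retrieve(query, docs, k)
    # Tag each passage with its ID so the model can cite sources.
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in hits)
    prompt = ("Answer using only the context below and cite the [source] you used. "
              "If the context does not contain the answer, say so.\n\n"
              f"Context:\n{context}\n\nQuestion: {query}")
    return prompt, [doc_id for doc_id, _ in hits]


DOCS = {
    "faq-1": "Employees can request remote work up to two days per week.",
    "faq-2": "Expense reports must be submitted within 30 days.",
    "faq-3": "The VPN client is required for remote access to internal tools.",
}
prompt, sources = build_grounded_prompt("How many days per week can employees work remote?", DOCS)
```

Returning the source IDs alongside the prompt makes it easy to log which passages grounded each answer.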

Bedrock Agents: Building multi-step workflows and integrating tools/actions for automation
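Bedrock Agents run the plan, call tool, observe, repeat loop as a managed service, with tools defined as action groups. The sketch below shows only the loop pattern locally: the tools and the scripted planner (which stands in for the model's decisions) are hypothetical.

```python
from typing import Callable


# Hypothetical tools standing in for the APIs an agent would call.
def lookup_order(order_id: str) -> str:
    return {"A100": "shipped"}.get(order_id, "unknown")


def send_email(to: str, body: str) -> str:
    return f"queued email to {to}"


TOOLS: dict = {"lookup_order": lookup_order, "send_email": send_email}


def run_agent(plan_next_step: Callable, goal: str, max_steps: int = 5):
    """plan_next_step(goal, history) returns ("tool", name, kwargs) or ("finish", answer)."""
    history = []
    for _ in range(max_steps):
        step = plan_next_step(goal, history)
        if step[0] == "finish":
            return step[1], history
        _, name, kwargs = step
        if name not in TOOLS:
            raise KeyError(f"unknown tool {name!r}")
        result = TOOLS[name](**kwargs)
        # Record the observation so the planner can use it on the next step.
        history.append((name, kwargs, result))
    raise RuntimeError("agent exceeded max_steps")


def scripted_planner(goal, history):
    # Stand-in for the model: chooses the next action from prior observations.
    if not history:
        return ("tool", "lookup_order", {"order_id": "A100"})
    if len(history) == 1:
        status = history[0][2]
        return ("tool", "send_email",
                {"to": "customer@example.com", "body": f"Your order is {status}."})
    return ("finish", f"Order status is {history[0][2]}; customer notified.")


answer, trace = run_agent(scripted_planner, "Check order A100 and notify the customer")
```

The `max_steps` cap and the unknown-tool check are simple safeguards against a planner that loops or requests a tool that does not exist.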

Guardrails: Safety controls, policy enforcement, and content filtering for responsible GenAI applications
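Amazon Bedrock Guardrails is configured as a managed policy (for example denied topics, content filters, and sensitive-information filters) and can be applied to both prompts and responses. To illustrate what such policies do, the sketch below implements two of those ideas locally: blocking a denied topic and masking email addresses. The topic phrases, the regex, and the blocked message are hypothetical; this is not the Guardrails API.

```python
import re
from dataclasses import dataclass


@dataclass
class GuardrailResult:
    allowed: bool
    text: str
    reason: str = ""


# Hypothetical policy: topics to refuse and a PII pattern to mask.
DENIED_TOPICS = {"investment advice": ["which stock", "should i invest", "buy shares"]}
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+")
BLOCKED_MESSAGE = "Sorry, I can't help with that topic."


def apply_guardrail(text: str) -> GuardrailResult:
    lowered = text.lower()
    for topic, phrases in DENIED_TOPICS.items():
        if any(p in lowered for p in phrases):
            # Block the whole message and record why, for auditing.
            return GuardrailResult(False, BLOCKED_MESSAGE, f"denied topic: {topic}")
    # Mask PII instead of blocking so the rest of the message still flows.
    return GuardrailResult(True, EMAIL.sub("{EMAIL}", text))


blocked = apply_guardrail("Should I invest in tech shares this year?")
masked = apply_guardrail("Contact me at jane.doe@example.com")
```

Running the same check on the user input and on the model output mirrors how a guardrail sits on both sides of the model call.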

Live Demo: Building a Generative AI chatbot using Amazon Bedrock (end-to-end walkthrough)
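A chatbot like the one in the demo mostly comes down to keeping multi-turn history and sending it with each request. The sketch below keeps history in the Bedrock Runtime Converse API message shape and builds a request dictionary; the model ID, system prompt, and inference settings are placeholders, and an offline echo function stands in for the real model call.

```python
from typing import Callable


class ChatSession:
    """Keeps multi-turn history in the Converse API message shape."""

    def __init__(self, model_id: str, system_prompt: str, max_turns: int = 10):
        self.model_id = model_id  # placeholder; use the model you selected
        self.system = [{"text": system_prompt}]
        self.messages = []
        self.max_turns = max_turns

    def build_request(self) -> dict:
        return {
            "modelId": self.model_id,
            "system": self.system,
            "messages": self.messages,
            "inferenceConfig": {"maxTokens": 512, "temperature": 0.2},
        }

    def ask(self, user_text: str, send: Callable[[dict], str]) -> str:
        self.messages.append({"role": "user", "content": [{"text": user_text}]})
        reply = send(self.build_request())
        self.messages.append({"role": "assistant", "content": [{"text": reply}]})
        # Drop the oldest user/assistant pairs so requests stay within budget.
        excess = len(self.messages) - 2 * self.max_turns
        if excess > 0:
            del self.messages[:excess]
        return reply


# With AWS credentials and model access, a real sender could look like:
# import boto3
# client = boto3.client("bedrock-runtime")
# def bedrock_send(request: dict) -> str:
#     response = client.converse(**request)
#     return response["output"]["message"]["content"][0]["text"]

def echo_send(request: dict) -> str:
    # Offline stand-in for the model call: echoes the latest user message.
    return "echo: " + request["messages"][-1]["content"][0]["text"]


session = ChatSession("placeholder-model-id", "You are a helpful FAQ assistant.", max_turns=2)
for question in ["first", "second", "third"]:
    last_reply = session.ask(question, echo_send)
```

Trimming whole pairs keeps the history starting with a user turn, which the alternating message format expects.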

Foundation Models (FM) vs. traditional ML

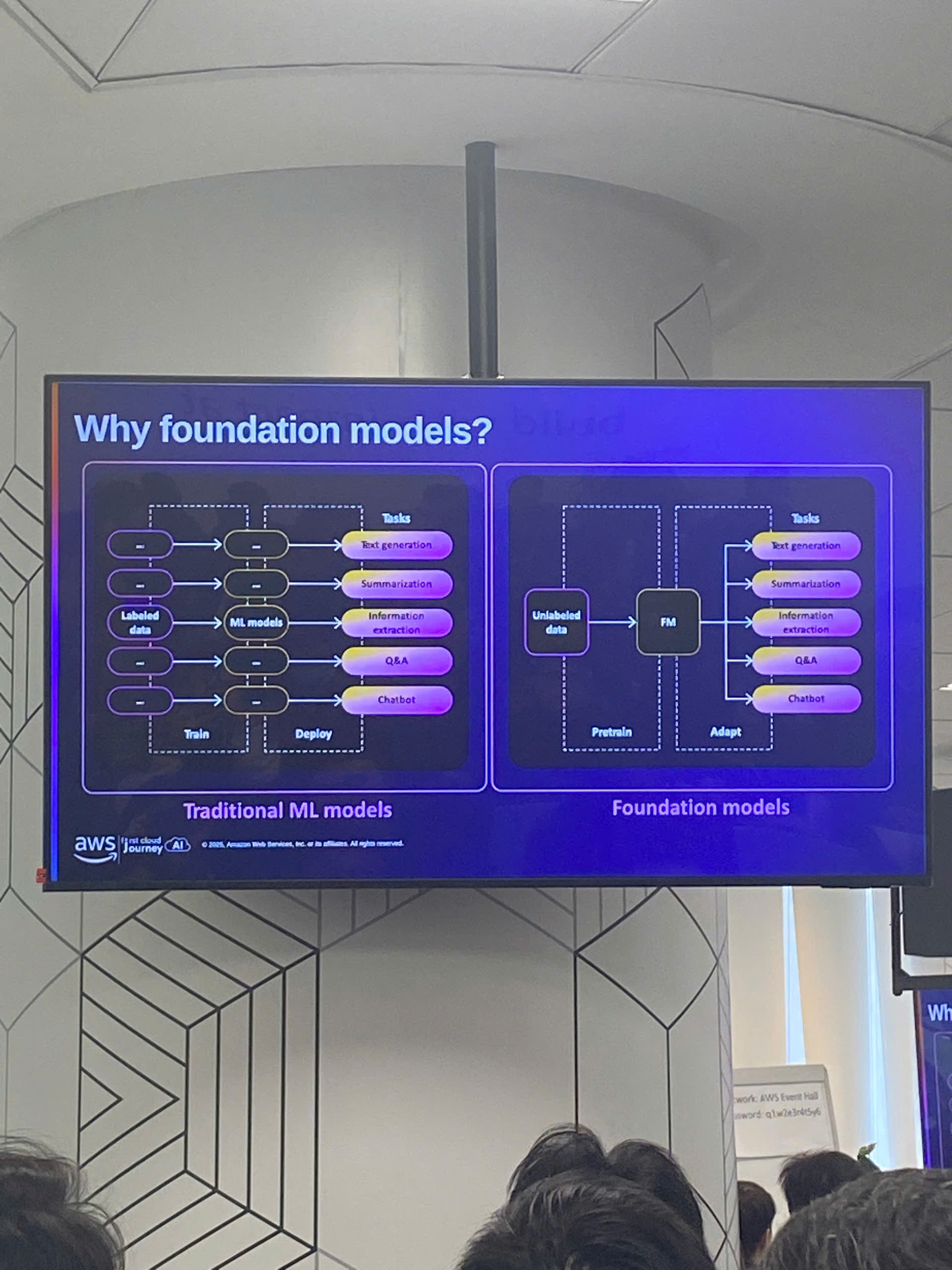

Traditional ML models: Each task (text generation, summarization, information extraction, Q&A, chatbot) needs its own labeled dataset, training run, and deployed model.

Foundation models: A single FM is pretrained on large volumes of unlabeled data, then adapted to many tasks (text generation, summarization, information extraction, Q&A, chatbot) through fine-tuning or adapters.


Key Takeaways

Design Mindset

- Use-case first: Start from the problem (chatbot, Q&A, automation) then choose the right model and architecture

- Grounding matters: For enterprise/knowledge-heavy use cases, accuracy depends on retrieval + context, not “bigger prompts”

- Safety by default: Responsible GenAI requires guardrails and policy controls from the beginning, not as an afterthought

Technical Architecture

- Model selection: Choose models based on cost, latency, quality, and alignment with task type (chat, reasoning, summarization)

- Prompt engineering: Use structured prompts and examples (few-shot) to reduce ambiguity and stabilize outputs

- RAG workflow: Retrieve relevant documents, inject them into the prompt as context, generate the answer, and optionally cite sources and log the trace

- Agents & tools: Agents enable multi-step execution with tools (APIs, search, data lookup) for real automation

- Guardrails controls: Filter unsafe content, enforce policies, and reduce risk in production GenAI apps

Applying to Work

- Prototype a Bedrock chatbot: Start with a small scoped knowledge base (FAQs/docs) and measure answer quality

- Create a prompt library: Standardize prompts/templates for common tasks (summarize, classify, extract, Q&A)

- Add RAG for reliability: Move from “prompt-only” to RAG when accuracy and knowledge freshness matter

- Use agents selectively: Apply Bedrock Agents when workflows require multiple steps and tool calls

- Implement guardrails: Define policies, monitor outputs, and log prompts/responses for continuous improvement
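A prompt library and prompt/response logging can start very small. The sketch below keeps shared templates in a dictionary of `string.Template` objects and appends one JSON line per interaction, with a crude keyword-hit score as a first answer-quality signal. The template texts, field names, and scoring rule are hypothetical starting points, not a prescribed format.

```python
import json
import time
from string import Template

# Hypothetical shared templates; $-placeholders are filled per request.
PROMPT_LIBRARY = {
    "summarize": Template("Summarize the text below in at most $max_sentences sentences.\n\n$text"),
    "classify": Template("Classify the text into one of: $labels. Reply with the label only.\n\n$text"),
    "extract": Template("Extract $fields from the text below as JSON.\n\n$text"),
}


def render(task: str, **values) -> str:
    # substitute() raises KeyError on a missing placeholder, catching template drift early.
    return PROMPT_LIBRARY[task].substitute(**values)


def log_interaction(path: str, task: str, prompt: str, response: str, expected_keywords=()):
    """Append one JSON line per call so outputs can be reviewed and scored later."""
    hit_rate = None
    if expected_keywords:
        # Crude quality signal: fraction of expected keywords found in the response.
        hits = sum(k.lower() in response.lower() for k in expected_keywords)
        hit_rate = hits / len(expected_keywords)
    record = {
        "ts": time.time(),
        "task": task,
        "prompt": prompt,
        "response": response,
        "keyword_hit_rate": hit_rate,
    }
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(record, ensure_ascii=False) + "\n")
    return record


prompt = render("classify", labels="billing, technical", text="My invoice shows the wrong amount.")
```

JSON Lines keeps each interaction independent, so the log can be appended to safely and later loaded into whatever evaluation tooling you adopt.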

Event Experience

Attending “AI/ML/GenAI on AWS” offered a hands-on view of how Amazon Bedrock can be used to build production-ready GenAI applications. The workshop structure made it easy to progress from fundamentals (model selection and prompting) to more advanced patterns like RAG, Agents, and Guardrails. The live demo connected these concepts into an end-to-end chatbot workflow that can be adapted to internal knowledge-base use cases.